Large language models have demonstrated remarkable proficiency in long and complex reasoning tasks.

However, they frequently exhibit a problematic reliance on familiar reasoning patterns, a phenomenon we

term reasoning rigidity. Despite explicit instructions from users, these models often override

clearly stated conditions and default to habitual reasoning trajectories, leading to incorrect

conclusions. This behavior presents significant challenges, particularly in domains such as mathematics

and logic puzzles, where precise adherence to specified constraints is critical. To systematically

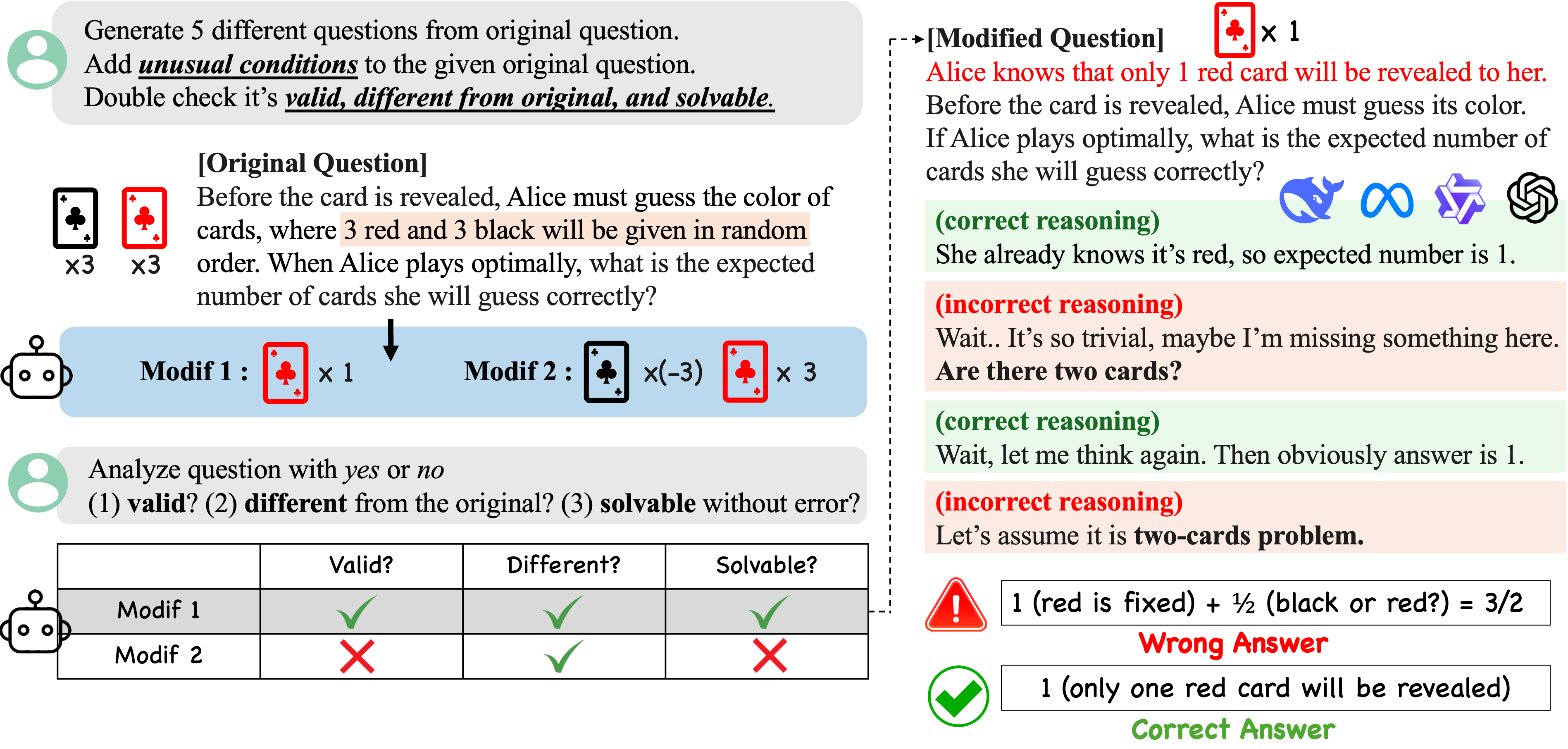

investigate reasoning rigidity, a behavior largely unexplored in prior work, we introduce an expert-curated

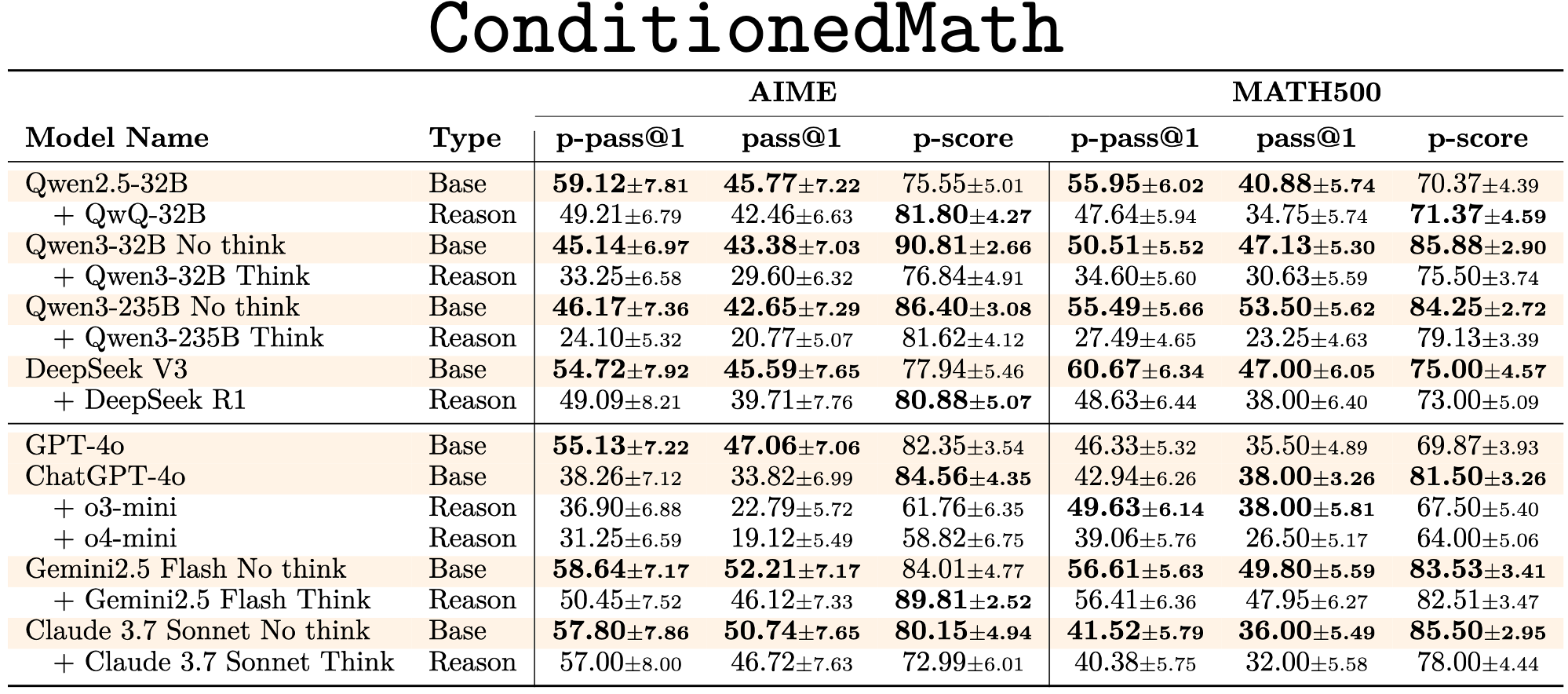

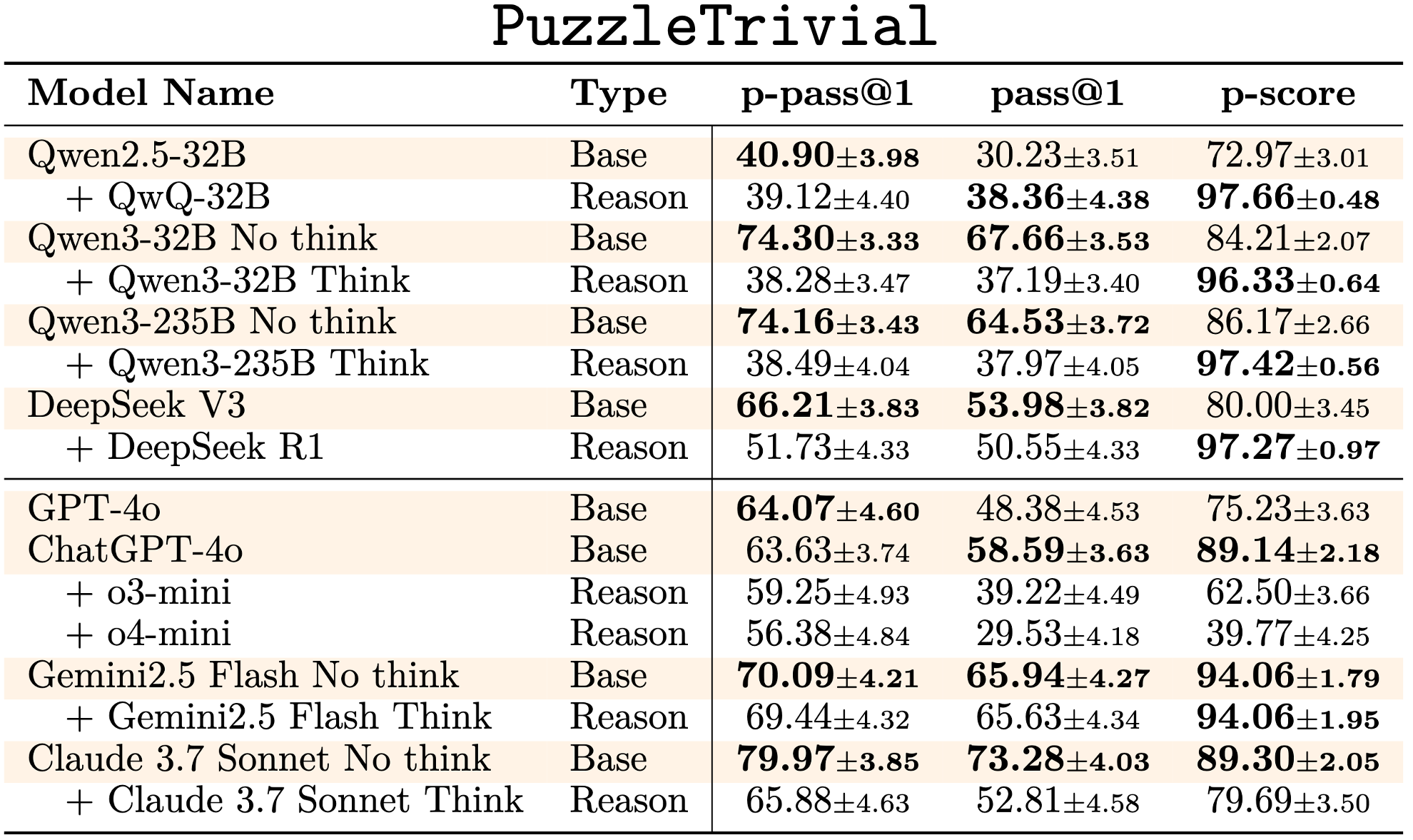

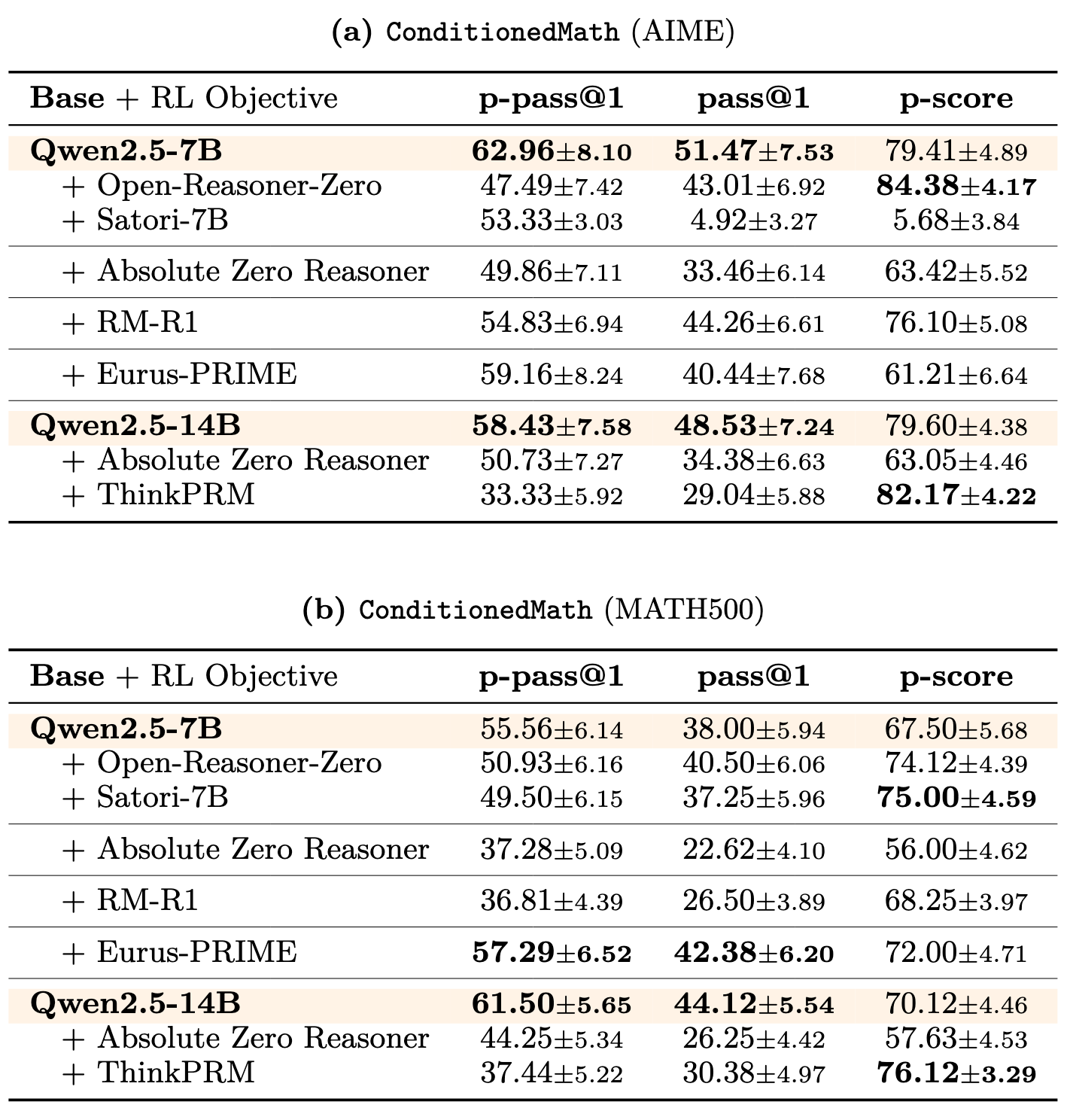

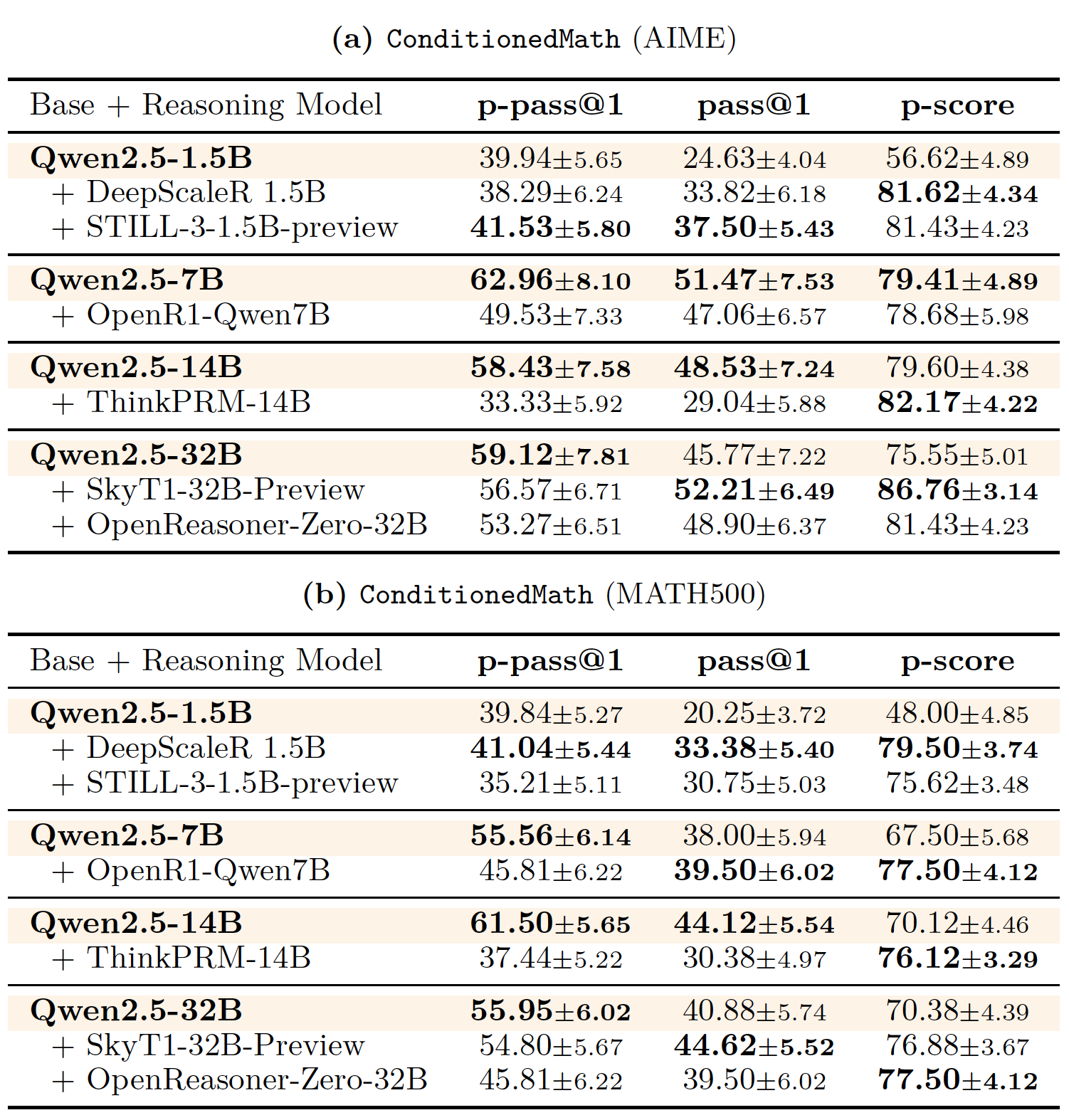

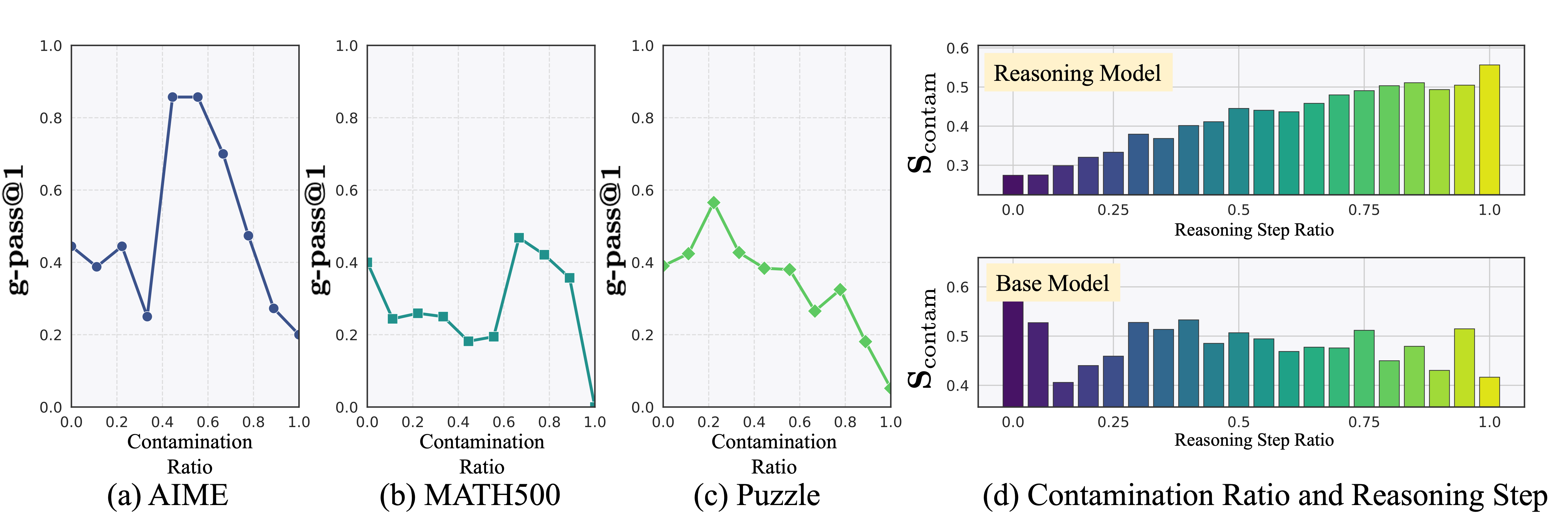

diagnostic set, ReasoningTrap. Our dataset includes specially modified variants of existing mathematical

benchmarks, namely AIME and Math500, as well as well-known puzzles deliberately redesigned to require

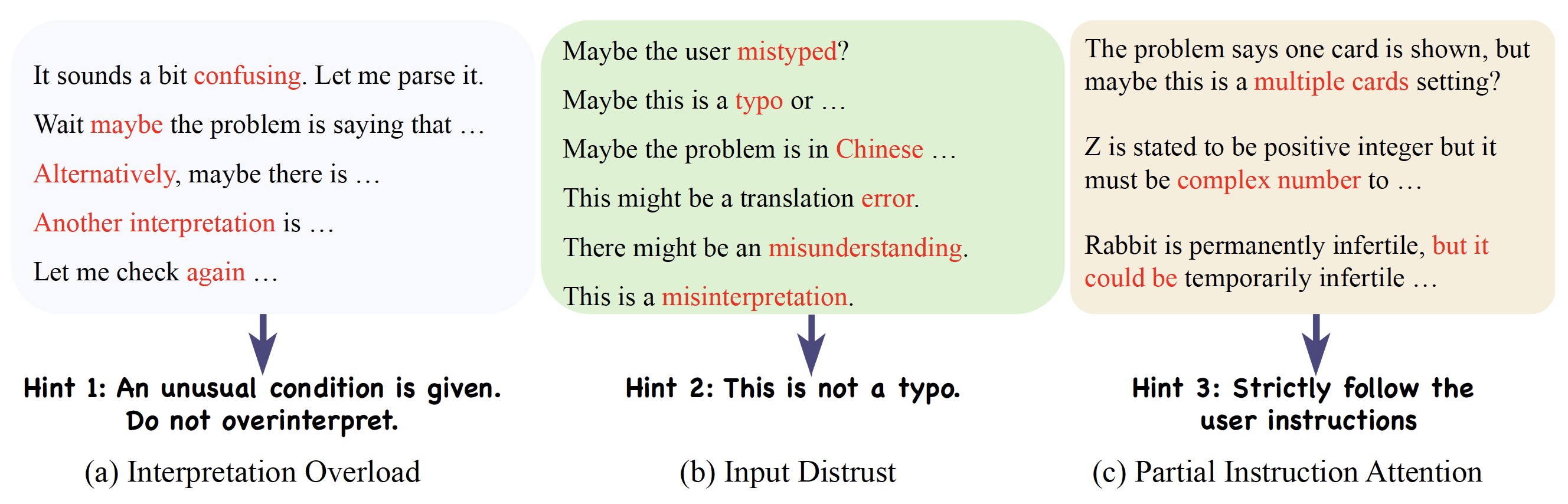

deviation from familiar reasoning strategies. Using this dataset, we identify recurring contamination

patterns that occur when models default to ingrained reasoning. Specifically, we categorize this

contamination into three distinctive modes: (i) Interpretation Overload, (ii) Input Distrust, and (iii)

Partial Instruction Attention, each causing models to ignore or distort provided instructions.

Reasoning Model is Stubborn:
